AI Prompt Action (Multi-LLM)

This action executes LLM prompts against multiple AI providers, including on-premises models served through Ollama.

The providers and models currently supported are listed below.

Supported LLM providers and models

Cohere

- command-r

- command-r-plus

Google Gemini

- gemini-pro

Anthropic

- claude-3-5-sonnet

Groq

Models are grouped by creator:

Meta (LLaMA)

- llama-3.2-11b-vision-preview

- llama-3.1-8b-instant

- llama-3.2-3b-preview

- llama-3.2-90b-vision-preview

- llama-guard-3-8b

- llama3-8b-8192

- llama-3.2-1b-preview

- llama-3.3-70b-versatile

- llama3-70b-8192

- llama-3.3-70b-specdec

DeepSeek / Alibaba Cloud

- deepseek-r1-distill-qwen-32b

- deepseek-r1-distill-llama-70b

Alibaba Cloud

- qwen-2.5-32b

Google

- gemma2-9b-it

SDAIA

- allam-2-7b

Mistral AI

- mixtral-8x7b-32768

Ollama

- User defined models

How to configure and use the action

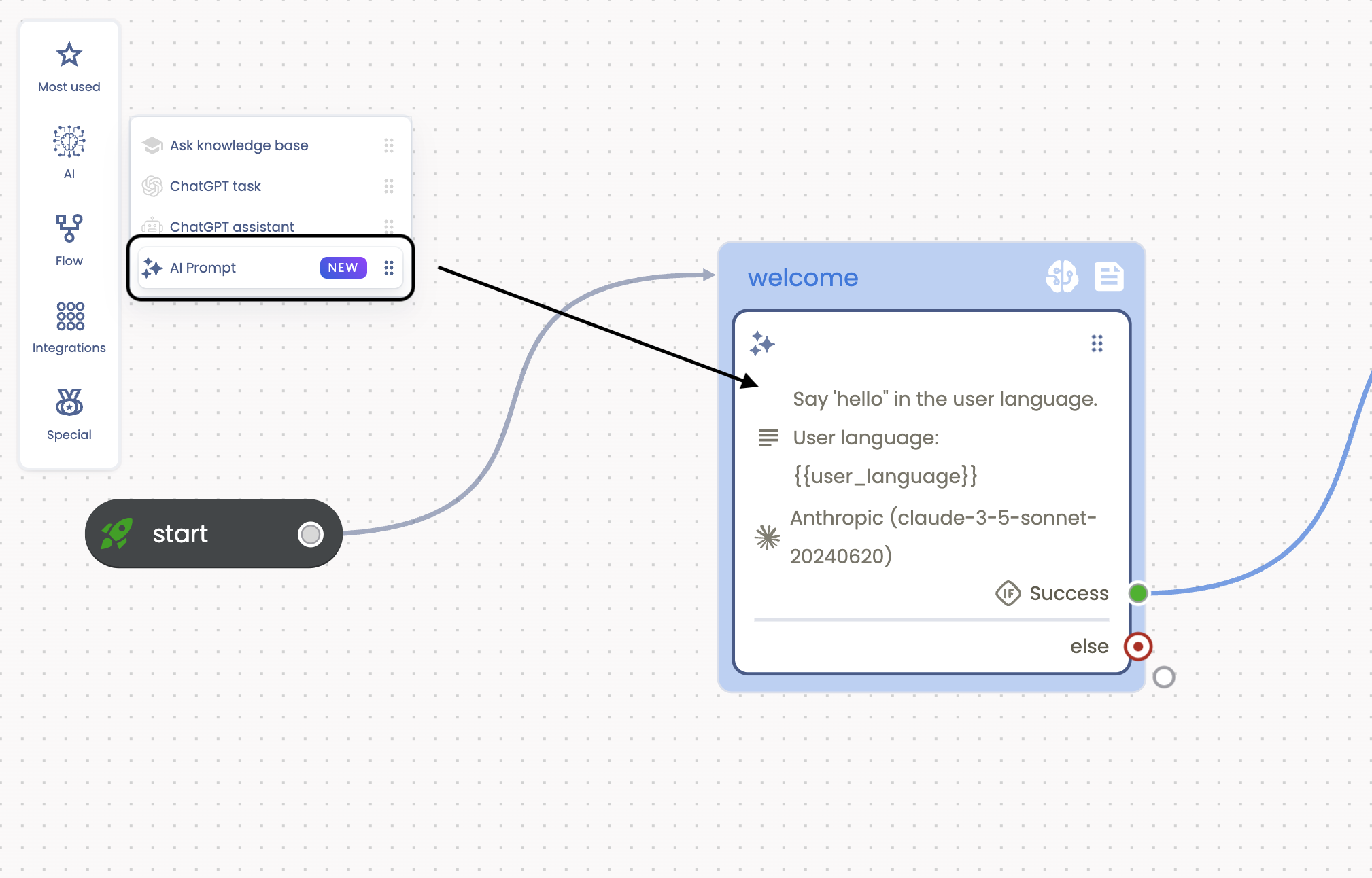

Drag and drop the action from the actions palette on the left onto the stage:

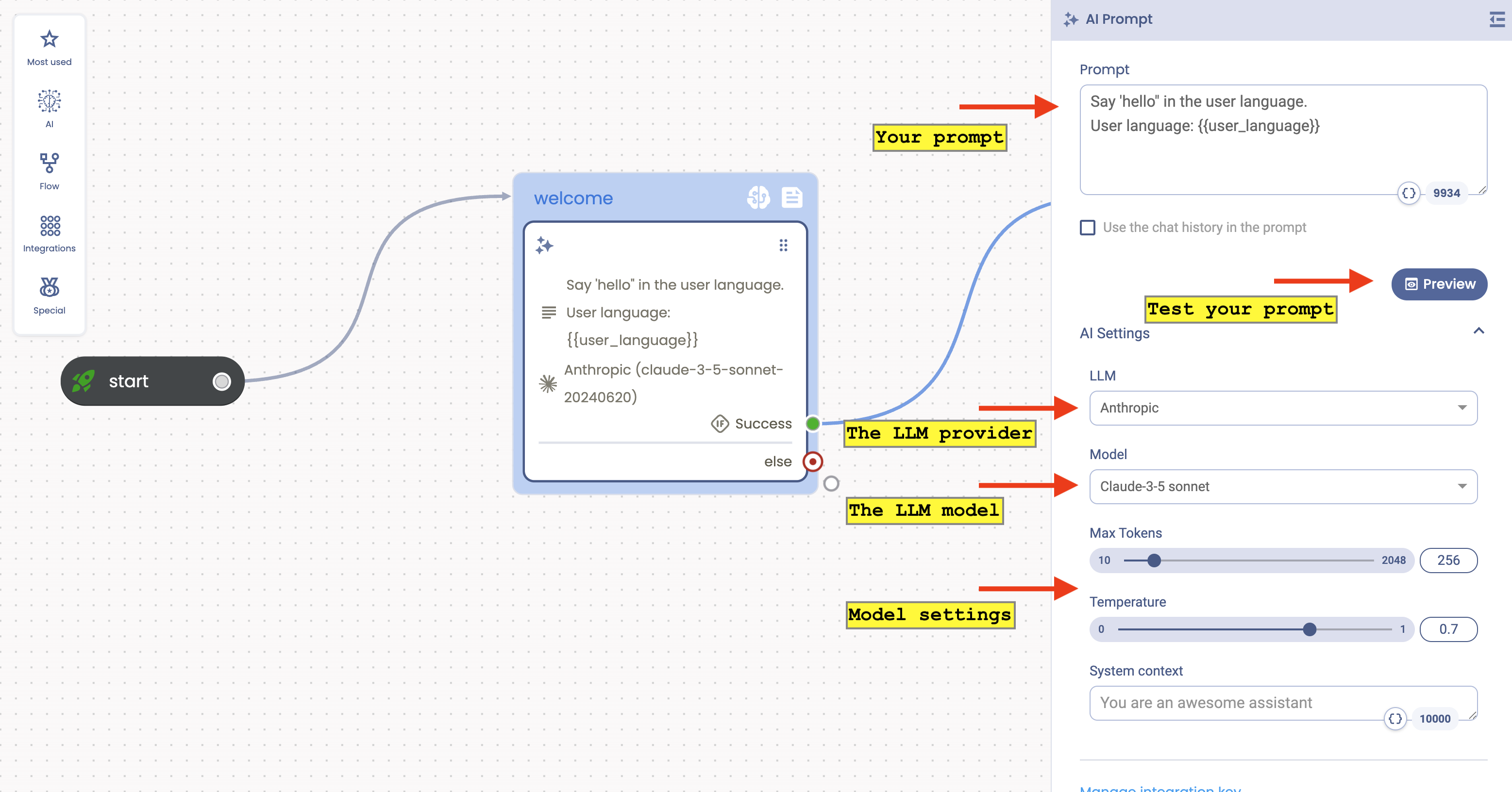

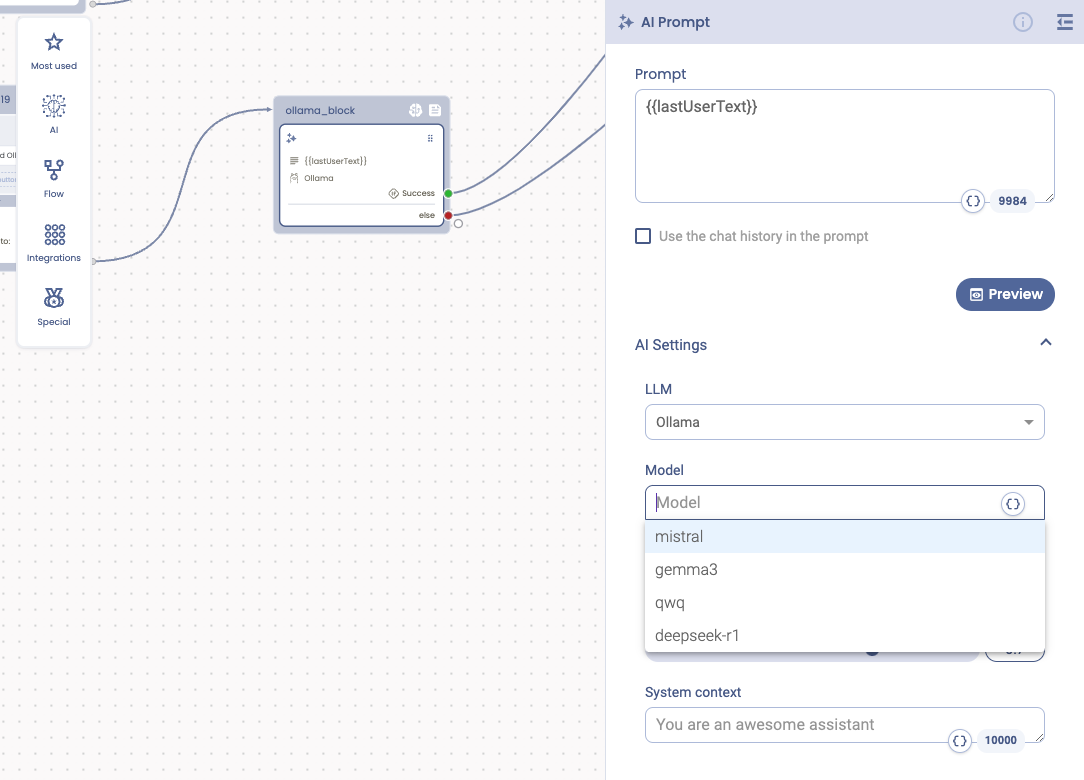

Once the action is on the stage, you can configure your favourite LLM prompt in the action detail panel.

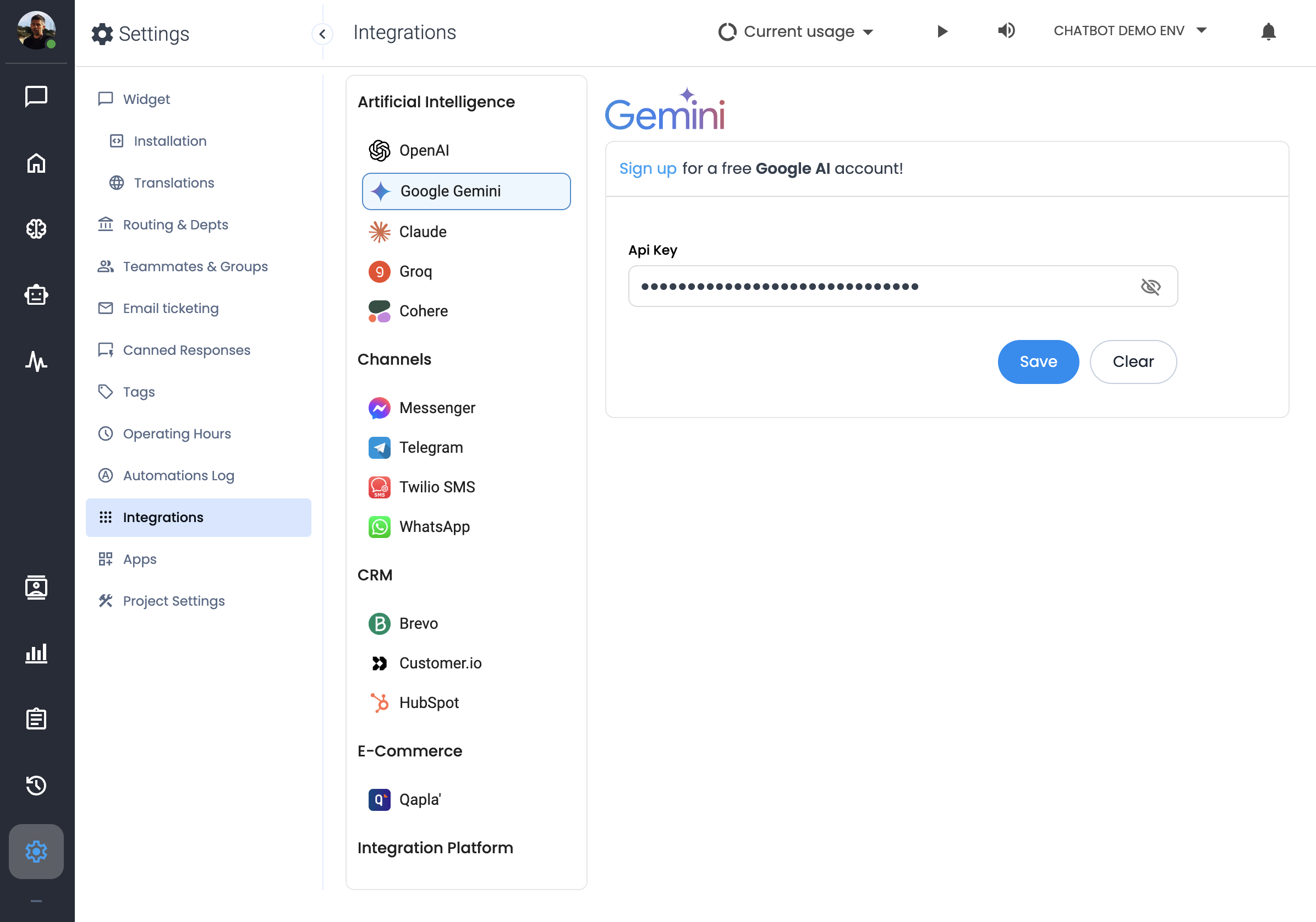

To use the action, you first need to configure the provider under Settings > Integrations, providing the corresponding LLM API key.

E.g. for Google Gemini:

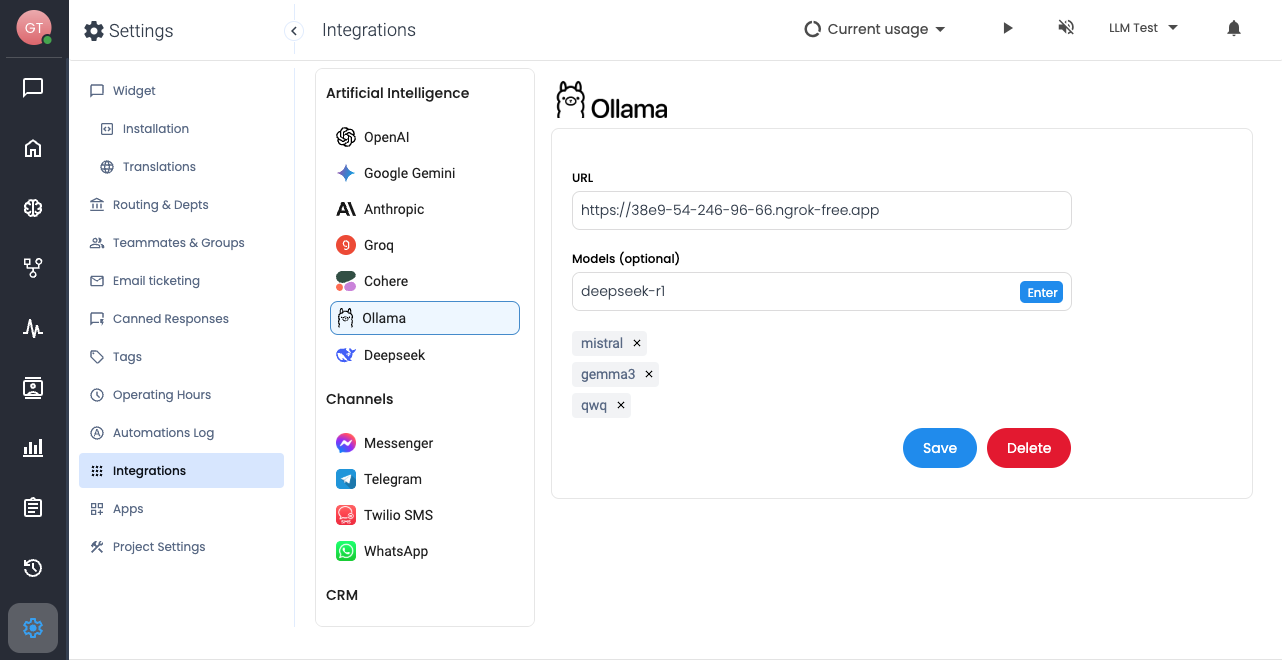

Using Ollama with the PromptAI action

As with other LLMs, to use Ollama with the PromptAI action you need to configure the Ollama integration on Tiledesk by going to Settings → Integrations and entering:

- The URL of the machine where Ollama is running

- (Optional) Your favorite models, for faster action configuration

To add a model to your favorites list, type the exact model name and press Enter. Finally, save the settings by clicking the Save button.
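Because the favorites list expects the exact model name, it can help to read the names directly from the Ollama server before typing them in. The following is a minimal sketch and not part of Tiledesk: it assumes Ollama's standard `GET /api/tags` endpoint, which lists locally installed models, and the helper names (`tags_url`, `model_names`, `list_ollama_models`) are hypothetical.

```python
import json
import urllib.request


def tags_url(base_url: str) -> str:
    """Build the URL of Ollama's model-listing endpoint from the server URL."""
    return base_url.rstrip("/") + "/api/tags"


def model_names(payload: dict) -> list[str]:
    """Extract the model names from a decoded /api/tags response, sorted."""
    return sorted(model["name"] for model in payload.get("models", []))


def list_ollama_models(base_url: str, timeout: float = 5.0) -> list[str]:
    """Query a running Ollama server and return its installed model names.

    Assumption: base_url is the same URL entered in the Ollama integration,
    e.g. http://localhost:11434 (Ollama's default port) for a local install.
    """
    with urllib.request.urlopen(tags_url(base_url), timeout=timeout) as resp:
        return model_names(json.load(resp))
```

Calling `list_ollama_models("http://localhost:11434")` against a running server returns the names as Ollama reports them, usually including a tag suffix such as `:latest`. If the call fails, the URL is likely not reachable from the machine running the script, which is worth checking before saving the integration.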

In the PromptAI action, select Ollama as the LLM and choose a model from your predefined favorites.

We hope you enjoy our new Action that will let you use your favourite LLM provider and models!

If you have questions about the AI Prompt Action or other Tiledesk features, feel free to email us at support@tiledesk.com or leave us your feedback.
